If you’re like me, you’ve started noticing a shift in the way people are searching for companies and brands. It’s no longer just about Google. More and more often, users type “What’s the best project management tool?” or “Tell me about Company X” directly into ChatGPT, Gemini, and other large language models (LLMs). As someone who has followed brand reputation for two decades, I’ve seen how answers from LLMs can boost (or bruise) a company’s digital profile, often based on unknown or even incorrect data.

In this article, I want to share the key questions I always ask before I trust any insight about my brand from a language model. There’s gold in their answers, but also risk—and I’ve seen firsthand how a single flawed AI response can influence buyer perceptions for months.

Why the questions matter

Before we dive in, one caveat: LLMs are remarkable, but they’re not infallible. Recent research in fields as rigorous as medicine shows that LLMs, powerful as they are, can still “hallucinate” and get basic facts wrong (Journal of Medical Internet Research). When insights from AI models feed decisions on PR, marketing, or customer engagement, asking tough questions is not just smart; it’s essential.

Are you sure what AI says about your brand is true?

Let’s break down the eight questions I keep on hand.

1. Where did the model get its information?

Whenever I see an LLM’s response about my brand, my first move is simple: trace the source. While some models cite their references, others don’t—and that’s a problem. If an LLM can’t tell you where a fact comes from, you cannot check its reliability. Studies examining LLM citations for systematic reviews found hallucination rates as high as 39.6% in certain models (Journal of Medical Internet Research).

When I use a platform like getmiru.io, I rely on citation analysis to clarify what is fact-based and what is pure guesswork. That’s essential for actionable insights.

2. How current is the referenced data?

What the AI says about your features, pricing, or press releases could be months or even years out of date. An LLM is only as current as its training data or its most recent web search, so you might be reading opinions or hard facts from 2022 or earlier. This lag can sway impressions, and not always in your favor.

I learned this the hard way after a website redesign wasn’t reflected in model answers for ages. For those who care about digital reputation, I recommend monitoring LLM responses alongside their source dates.
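If you log each LLM answer together with the publication dates of the sources it cites, a few lines of code can flag the stale ones. The sketch below is a minimal illustration under that assumption. The `answer_sources` record, the `stale_sources` helper, and the one-year threshold are hypothetical choices for this example, not features of any particular monitoring tool.

```python
from datetime import date

# Hypothetical record of one LLM answer: each cited source mapped to its
# publication date. Field names are illustrative only.
answer_sources = {
    "pricing page": date(2022, 3, 1),
    "2024 press release": date(2024, 6, 15),
    "review blog": date(2021, 11, 20),
}

def stale_sources(sources, as_of, max_age_days=365):
    """Return the names of sources published more than max_age_days before as_of."""
    return sorted(
        name
        for name, published in sources.items()
        if (as_of - published).days > max_age_days
    )

print(stale_sources(answer_sources, as_of=date(2025, 1, 1)))
```

For a check run on 1 January 2025, this prints `['pricing page', 'review blog']`, the two sources that are more than a year old. Those are the answers worth re-checking first.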

3. Is the information consistent across LLMs?

In my experience, different LLMs sometimes say wildly different things about the same brand. A study of LLM accuracy on medical topics found that reliability varied by more than 30% between generations (Pharmacoepidemiology and Drug Safety). A similar split can show up in business contexts.

- One model might correctly describe your latest product update.

- Another sticks to an outdated description.

- A third could mix up competitor capabilities and yours.

Comparing multiple model outputs is a habit I’ve adopted, and services like getmiru.io make side-by-side checks much easier.
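If you collect answers to the same prompt from several models, even a crude script can show which ones mention your current facts. The sketch below assumes you have already gathered the answers as plain text. The model names, the example brand “Acme Tracker”, and the `missing_facts` helper are hypothetical. Plain substring matching misses paraphrases and can be fooled by negations (“no mobile app”), so treat its output as a prompt for manual review, not a verdict.

```python
# Hypothetical answers collected from three models to the same prompt.
answers = {
    "model_a": "Acme Tracker now includes Gantt charts and a mobile app.",
    "model_b": "Acme Tracker is a desktop-only tool for simple task lists.",
    "model_c": "Acme Tracker offers a mobile app and built-in time tracking.",
}

# Current facts about the (hypothetical) product, each paired with a
# lowercase phrase to search for in the answer text.
current_facts = {
    "Gantt charts": "gantt chart",
    "mobile app": "mobile app",
}

def missing_facts(answers, facts):
    """Map each model to the sorted list of current facts its answer omits."""
    report = {}
    for model, text in answers.items():
        lowered = text.lower()
        report[model] = sorted(
            name for name, phrase in facts.items() if phrase not in lowered
        )
    return report

for model, missing in missing_facts(answers, current_facts).items():
    print(model, missing or "all current facts mentioned")
```

Here `model_a` mentions both facts, `model_b` omits both, and `model_c` omits the Gantt charts, which is exactly the kind of split worth investigating.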

4. Are there signs of hallucination or fabrication?

I’ve read LLM responses that invented product features, changed pricing, or referenced non-existent partnerships. This isn’t rare. In language-judgment tasks, some models performed at “chance” levels, indicating a real risk of made-up claims (Proceedings of the National Academy of Sciences).

Unusual, very specific, or overly positive/negative statements are often a sign the model is “hallucinating.” Whenever I spot new claims about my company, I double-check: did we actually say that, or is AI making it up?

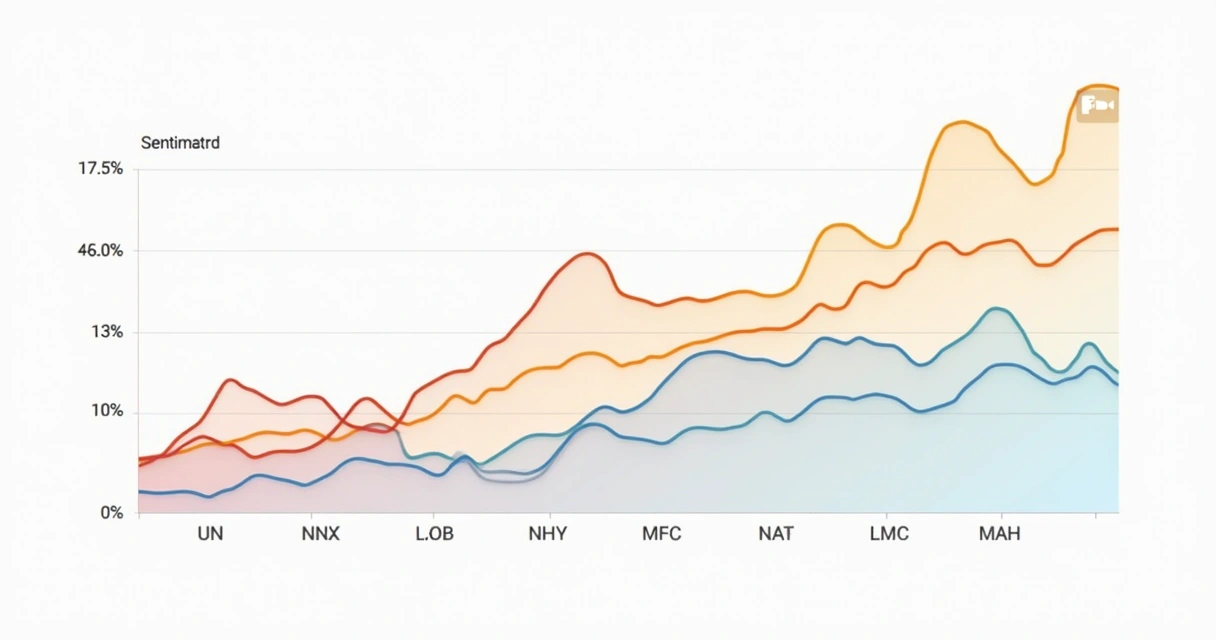

5. Is the sentiment aligned with reality?

One of the most surprising things I’ve noticed is how LLMs can swing from glowing praise to subtle criticism—sometimes for no reason I can identify. The underlying tone impacts brand reputation in ways you might not expect. If an LLM answers that your product is “outdated” or “market-leading,” it influences perception even when the facts are muddied.

By tracking sentiment over time (something I do often through getmiru.io), I can catch when brand image drifts in online conversations or model outputs. This helps in aligning my messaging and correcting negative trends fast.
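If you record a sentiment score for your brand’s LLM answers each week, a simple rolling comparison can surface drift before it hardens into a trend. The sketch below assumes scores on a scale from -1 (negative) to 1 (positive), produced by whatever sentiment scorer you use. The `drift_alerts` function, the four-week window, and the 0.2 threshold are hypothetical values to tune, not a standard.

```python
from statistics import mean

def drift_alerts(scores, window=4, drop=0.2):
    """Return indices where the mean of the latest `window` scores is more
    than `drop` below the mean of the `window` scores just before them."""
    alerts = []
    for end in range(2 * window, len(scores) + 1):
        previous = mean(scores[end - 2 * window:end - window])
        current = mean(scores[end - window:end])
        if previous - current > drop:
            alerts.append(end - 1)  # index of the most recent score compared
    return alerts

# Hypothetical weekly scores: steady, then a slide over the last three weeks.
weekly_scores = [0.6, 0.5, 0.6, 0.5, 0.6, 0.2, 0.1, 0.0]
print(drift_alerts(weekly_scores))
```

This prints `[7]`: the last four weeks average 0.225 against 0.55 for the four before, so the most recent week is flagged for a closer look.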

6. What do real users see versus what admins see?

Here’s something I realized when auditing LLM answers: the output in a logged-in dashboard view is sometimes not the same as what end users see when they query a chatbot. I once spent hours before discovering that a positive review was “hidden” from public results. Knowing which version the public actually sees is key to transparency.

If you monitor your brand’s digital reputation, or want to start, make sure to check both the logged-in (admin) and anonymous (user) perspectives. This is especially relevant for marketing and for areas like product monitoring.

7. How does the model compare you to others?

Every time I run competitor intelligence, I see LLMs placing brands in direct comparison, often on criteria that are outdated or arbitrary. If the language model calls your brand “the leading choice” next to another, ask: is that based on data, popular opinion, or something the model inferred without evidence?

The weight of AI-generated comparisons can shape user choices even more than direct advertising. I always want to understand—and if needed, correct—the context of these comparisons.

8. How can you act on AI-generated insights?

This is the last and perhaps the most actionable question. After working through all of the above, I ask myself: what next? Can I contact the source, fix incorrect website content, issue a press release, or request a correction? If the LLM output is negative or wrong, do I have systems in place to update the data or push the right story out?

Monitoring tools like getmiru.io give me early warning when things go wrong and the means to effect change quickly. They’ve become a must in my digital toolkit.

Putting these questions into action

Each of these questions can make the difference between reacting to an AI error months later and staying one step ahead. Over the years, applying them has saved me from PR headaches and lost customers. When in doubt, I turn to reputable sources and digital monitoring platforms that help keep my data checked and up to date. I also recommend following developments in artificial intelligence, digital reputation, marketing, and monitoring to see how these areas evolve.

Remember: as AI-generated answers become more common in “first impression” searches, the cost of not knowing what’s said about your brand will only rise. Explore competitor mention tracking, and consider a purpose-built monitoring solution like getmiru.io to see what LLMs are really saying about you and to future-proof your brand reputation. Be proactive, not reactive.

Frequently asked questions

What is an LLM insight?

An LLM insight is an observation, summary, or piece of information produced by a large language model (LLM) like ChatGPT, Gemini, or Claude about a topic or brand, based on its training data and algorithms. These insights can include answers to direct questions, summarizations, sentiment analysis, and even side-by-side comparisons.

How reliable are LLM brand insights?

Reliability varies. Studies show LLMs can achieve high accuracy on structured tasks, such as answering objective clinical research questions. However, they can also produce hallucinations, outdated information, or inconsistent comparisons, especially in less regulated contexts. Always validate before acting.

How can I verify LLM information?

Check citations if available, compare answers across different LLMs, and cross-reference with your official or trusted industry sources. Using monitoring tools (like getmiru.io) helps keep tabs on what’s being said, while manual checking helps separate fact from fiction. Never accept LLM outputs at face value without investigation.

Is it worth it to trust LLMs?

LLMs can provide fast, broad, and sometimes very accurate insights, but blind trust is risky. Use them as one tool among many, while applying critical thinking and using monitoring solutions to protect your brand.

What questions should I ask about LLM data?

Ask: What is the source of this data? How recent is it? Is the information consistent across multiple models? Are there signs of hallucination or fabrication? Does sentiment align with reality? How are brand comparisons drawn? Who sees which version of the results? And finally, what can you do if the information is wrong or outdated?